The attraction of AI is that it is learns by experience. All learning requires feedback, whether its an animal, a human or a computer doing the learning, The learning-entity needs to explore its environment in order to try different behaviors, create experiences and then learn from them.

For computers to learn about computer-based environments is relatively easy. Based on a set of instructions, a computer can be trained to learn about code that it is executing or is being executed by another computer. The backbone of the internet uses something to similar to ensure data gets from point A to point B.

For humans to learn about human-environments, this is also easier. It is what we have been doing for tens of thousands of years.

For humans to learn about computer-based environments is hard. We need a system to translate from one domain into another. Then we need a separate system to interpret what we have translated. We call this computer programming, and because we designed the computer, we have a bounded-system. There is still a lot to understand, but it is finite and we know the edges of the system, since we created it.

It is much harder for computers to learn about human environments. The computer must translate real-world (human) environment data into its own environment, and then the computer needs to decode this information and interpret what it means. Because the computer didn't design our world it doesn't have the advantage that humans do when we learning about computers. It also doesn't know if it is bounded-system or not. For all the computer knows, the human-world is infinite and unbounded - which it could well be.

In the short term, to make this learning feasible we use human input. Human's help train computers to learn about the real-world environments. I think of the reasons that driver-less car technology is being focused on, is that the road system is a finite system (essentially its 2D) that is governed by a set of rules.

•Don't drive into anything, except a parking spot.

•Be considerate to other drivers, e.g. take turns at 4-way stop signs.

•Be considerate to pedestrians and cyclists.

•etc

This combination of elements and rules makes it a perfect environment to train computers to learn to drive, not so much Artificial Intelligence but Human-Assisted Intelligence. Once we have trained a computer to decode the signals from this real-world environment and make sensible decisions with good outcomes, we can then apply this learning to different domains that have more variability in them, such as delivering mail and parcels.

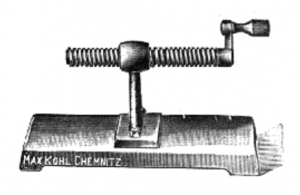

This is very similar to the role of the machine screw in the industrial revolution. Once we had produced the first screw, we could then make a machine that could produce more accurate screws. The more accurate the screw, the more precise the machine, the smaller tolerance of components it could produce, the better the end-machine. Without the machine screw, there would have been no machine age.

This could open the doors to more advanced AI, it is some way off though because time required to train computers to learn about different domains.