In an interview with Shane Parrish, Daniel Kahneman made many interesting comments, one of which was about how to make better decisions. Kahneman said that despite studying decision-making for many years, he was still prone to his own biases. Knowing about your biases doesn't make them any easier to overcome.

His recommendation for avoiding bias in your decision-making is to devolve as many decisions as you can to an algorithm. Applied to analytical and statistical jobs, this suggests that no matter how hard we try, we always approach analysis with biases that are hard to overcome. Sometimes our personal biases are exaggerated by external incentives. Whether you are evaluating your boss's latest pet idea or writing a research report for a paying client, delivering the wrong message can be costly, even if it is the right thing to do.

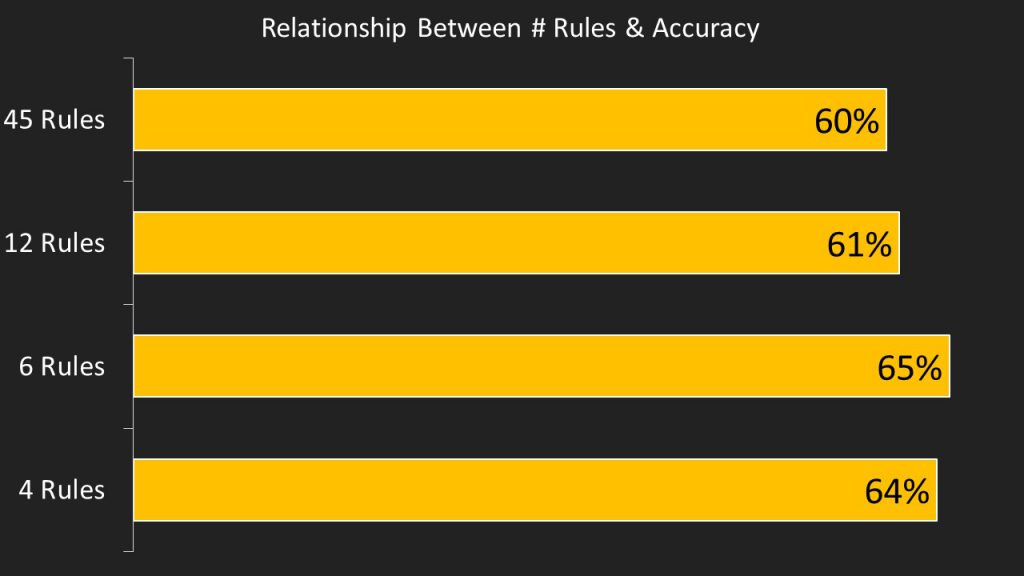

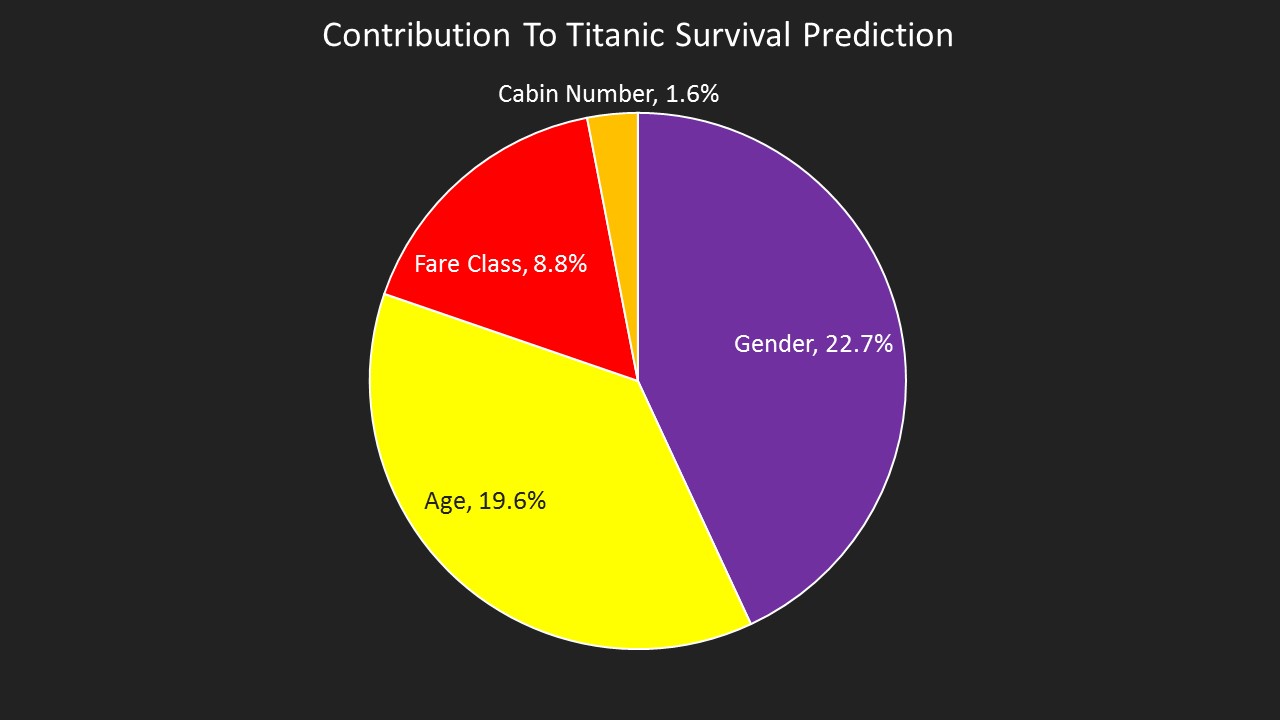

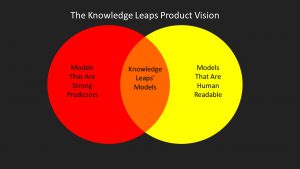

Knowledge Leaps has an answer. We have built two tools to overcome human bias in analysis. The first is a narrative builder that can be applied to any dataset to identify the objective narrative captured in the data, surfacing that narrative without introducing human biases.

The second tool removes bias by using many pairs of eyes to analyze data and average out any potential analytical bias. Instead of a single bias-prone analyst looking at a dataset, our tool lets many people look at it simultaneously and share their individual interpretations of the data. Across many analysts, this tool will remove bias through collaboration and transparency.
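To make the "average out" idea concrete, here is a minimal Python sketch. It is not the Knowledge Leaps tool. It is a toy simulation in which each analyst's reading is the true value plus that analyst's own bias. The function names, the numbers, and the assumption that biases are independent and centered on zero are all hypothetical and chosen for illustration. A bias shared by every analyst would not cancel this way.

```python
import random
import statistics


def analyst_estimates(true_value, n_analysts, bias_spread, rng):
    """Return one estimate per analyst: the true value plus that analyst's own bias.

    Assumption for this sketch: biases are independent draws from a
    zero-centered normal distribution with standard deviation bias_spread.
    """
    return [true_value + rng.gauss(0.0, bias_spread) for _ in range(n_analysts)]


def pooled_estimate(estimates):
    """Combine the individual readings by taking their simple average."""
    return statistics.mean(estimates)


def mean_abs_errors(true_value=100.0, n_analysts=50, bias_spread=10.0,
                    trials=200, seed=0):
    """Average absolute error of a lone analyst vs. the pooled estimate."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    single_errors, pooled_errors = [], []
    for _ in range(trials):
        estimates = analyst_estimates(true_value, n_analysts, bias_spread, rng)
        # The first analyst stands in for "a single human looking at the data".
        single_errors.append(abs(estimates[0] - true_value))
        pooled_errors.append(abs(pooled_estimate(estimates) - true_value))
    return statistics.mean(single_errors), statistics.mean(pooled_errors)


if __name__ == "__main__":
    single, pooled = mean_abs_errors()
    print(f"lone analyst error: {single:.2f}, pooled error: {pooled:.2f}")
```

Under these assumptions, the pooled error shrinks as more analysts are added, because the individual biases point in different directions and partly cancel.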

Get in touch to learn more: doug@knowledgeleaps.com