While noodling around on the internet I came across this paper (Integrating UNIX Shell In A Web Browser). Although it was written 18 years ago, it comes to a conclusion that is hard to argue with: Graphical User Interfaces slow work processes down.

The authors claim that GUIs slow us down because they require a human to interact with them. Having worked on a GUI-led data analytics application, I am inclined to agree: the time and cost of developing a GUI increase the simpler we try to make it for the user.

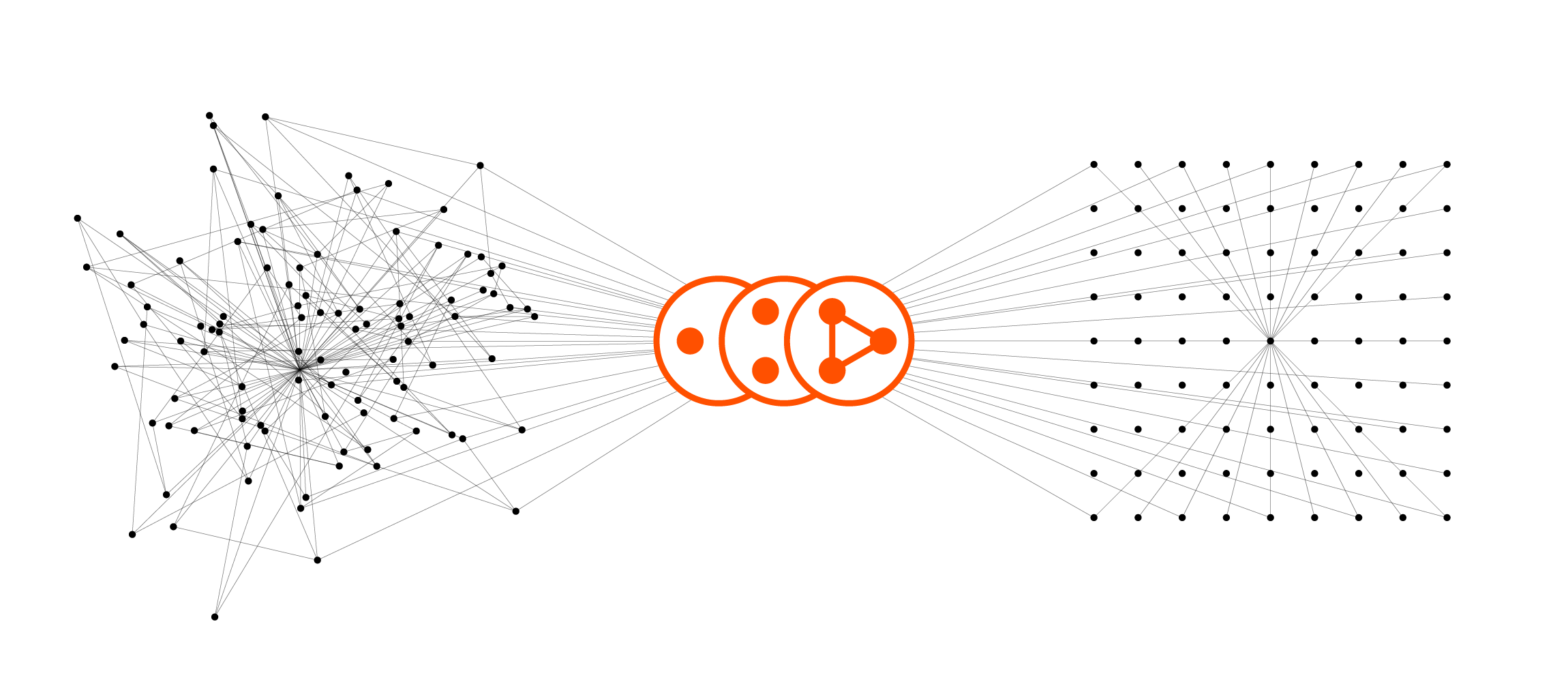

To that end we are creating a programming language for data engineering on our platform. Our working title for the language is wrangle (WRANgling Data Language). It will support ~20 data engineering functions (e.g., filtering, mapping, transforming) and the ability to string commands together to perform more complex data engineering tasks.
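
As a rough illustration of what stringing commands together could look like, here is a minimal Python sketch. It is not wrangle syntax, which is still being designed. The `pipeline`, `filter_rows`, and `map_column` helpers and the sample records are hypothetical names invented for this example.

```python
from functools import reduce
from typing import Callable, Iterable

Row = dict
Step = Callable[[Iterable[Row]], Iterable[Row]]


def filter_rows(predicate: Callable[[Row], bool]) -> Step:
    """Build a step that keeps only the rows matching the predicate."""
    def step(rows):
        return (row for row in rows if predicate(row))
    return step


def map_column(column: str, func: Callable) -> Step:
    """Build a step that applies func to one column of every row."""
    def step(rows):
        return ({**row, column: func(row[column])} for row in rows)
    return step


def pipeline(*steps: Step) -> Step:
    """Chain steps left to right, much like a shell pipe."""
    def run(rows):
        return reduce(lambda data, step: step(data), steps, rows)
    return run


# Hypothetical sample data, invented for this sketch.
records = [
    {"city": "leeds", "sales": 120},
    {"city": "york", "sales": 45},
    {"city": "hull", "sales": 300},
]

# Two small steps combined into one larger operation.
clean = pipeline(
    filter_rows(lambda row: row["sales"] >= 100),
    map_column("city", str.title),
)

result = list(clean(records))
print(result)
```

Each step takes rows in and hands rows out, so any step can follow any other. That is the property that makes Unix pipes composable, and it is the one we want to keep.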

Excerpt from the paper: "The transition from command-line interfaces to graphical interfaces carries with it a significant cost. In the Unix shell, for example, programs accept plain text as input and generate plain text as output. This makes it easy to write scripts that automate user interaction. An expert Unix user can create sophisticated programs on the spur of the moment, by hooking together simpler programs with pipelines and command substitution. For example:

```sh
kill `ps ax | grep xterm | awk '{print $1;}'`
```

This command uses ps to list information about running processes, grep to find just the xterm processes, awk to select just the process identifiers, and finally kill to kill those processes.

These capabilities are lost in the transition to a graphical user interface (GUI). GUI programs accept mouse clicks and keystrokes as input and generate raster graphics as output. Automating graphical interfaces is hard, unfortunately, because mouse clicks and pixels are too low-level for effective automation and interprocess communication."
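
The quoted pipeline works because every stage reads and writes plain text. Here is a minimal Python sketch of the same idea, run on a made-up process listing rather than live `ps` output. The sample text and the helper names `grep` and `first_field` are assumptions for illustration, and nothing is actually killed.

```python
# Hypothetical `ps ax`-style output, invented for this sketch.
PS_OUTPUT = """\
  PID TTY      STAT   TIME COMMAND
  101 ?        Ss     0:01 /sbin/init
  412 pts/0    S      0:00 xterm
  413 pts/1    S      0:00 bash
  587 pts/2    S      0:02 xterm -e top
"""


def grep(lines, pattern):
    """Keep lines containing the pattern (plain substring match, not a regex)."""
    return [line for line in lines if pattern in line]


def first_field(lines):
    """Return the first whitespace-separated field, like awk '{print $1;}'."""
    return [line.split()[0] for line in lines]


# Text in, text out: each stage consumes the previous stage's lines.
pids = first_field(grep(PS_OUTPUT.splitlines(), "xterm"))
print(pids)  # the process IDs a real script would hand to kill
```

No comparable shortcut exists for a GUI process manager, where the same information is only available as pixels on the screen.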